Cats made AI

The inside story

Neal Stephenson makes this connection between brains and computers:

"When you first acquire a computer, it has a BIOS program in it that enables it to recognize and use a floppy drive, a hard drive, a keyboard, and a screen. But the BIOS doesn't do anything really interesting—it just provides a foundation on which other layers of software can be built. A human being is born with a brain that has certain basic abilities—the ability to breathe, to eat, to make noise, to flail limbs—but that doesn't mean that the person can speak or build things or play games. The higher-level functions only come into play later, after experience has accumulated."

Baby brains might need to learn a few things, but they’re still better than the best computers we’ve got. We might not know exactly how our brains work, but we know they’re amazing. Billions of neurons, with millions firing at any moment, form hundreds of trillions of connections, all running on about 20 watts, less power than a lightbulb.

That baby is going places.

We’d like computers to be more like our brains, and the two are growing more alike. Machine learning is the big breakthrough that powers AI. In ML, computers learn patterns from data instead of being explicitly programmed. Kinda like how we learn from experience.

About a decade ago, the internet had more cat videos than should exist. So many. Back then, a computer couldn’t tell a cat from a dog from a squirrel from a garbage truck. So researchers scaled up a machine learning approach loosely modeled on the brain’s structure, called neural networks.

In 2012, a Google team fed a large neural net 10 million still frames pulled from YouTube videos. It trained for three days and then, all of a sudden, it knew what a cat looked like, even though nobody had ever told it what a cat was. AI was off to the races, thank you cat lovers.

Just like we don’t know exactly how our brains work, we don’t know exactly how these networks recognize cats, only that they learned to. The same kind of learning later helped computers beat humans at our own games, as DeepMind’s AlphaGo did at Go in 2016.

Even if we don’t know how any of this works, we can go back to Neal’s comparison of computers and brains, and figure out how we got here.

Computers are made up of two things: hardware and software. How’s that like your brain? Your brain is the hardware, and your experiences are the programming, or software. Computers use circuits, with programming to tie them together; your brain uses neurons and synapses to make connections. Both run on electrical signals.

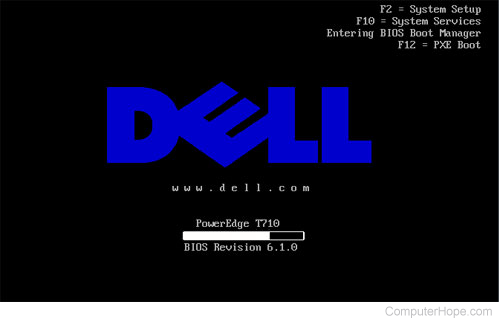

What’s this first software a computer loads, the BIOS? Your computer is a useless pile of metal and plastic until the BIOS, or Basic Input/Output System, loads. It’s the equivalent of connecting your brainstem to the rest of your body to keep your heart beating and enable your reflexes.

IBM wrote its own BIOS to light up the original IBM PC in 1981. Ironically, it was the one key piece IBM kept proprietary; everything else, including the processor, the memory, and the operating system, it outsourced. Notably, Intel provided the processor, or CPU, and Microsoft the OS. The Wintel duopoly was born.

Phoenix Technologies reverse-engineered IBM’s BIOS using a clean-room process and licensed its version to other PC makers. That cleared the final hurdle for the revenge of the clones, and the PC revolution was off, emerging from the ashes of the mainframes.

Computers need a few more layers of software before you can do anything with them. A small shim program called a boot loader finds the operating system and loads it into memory, and the operating system then loads drivers to access the rest of the hardware. Once the operating system is up, you’ve got the functional equivalent of a brain that’s conscious and ready to learn and do.

You program your brain with experiences, and that takes a long time. Years to develop eye-hand coordination, speech, reasoning, not to mention higher-level achievements like playing the piano or dunking a basketball or inventing neural nets.

Computers gain capabilities through ever more sophisticated programming.

I coded Lunar Lander on the Heathkit 3400 in low-level assembly, or machine language, where each entry was a basic instruction like storing a number in a register or adding two numbers. Higher-level languages like BASIC, FORTRAN, C, and C++ let you code more complicated things, and compilers translate those higher-level languages down into machine language.
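To see that kind of translation in miniature, Python’s standard `dis` module lists the lower-level instructions behind a line of code. One caveat: Python compiles to bytecode for its own virtual machine, not to the CPU’s machine language, and the instruction names differ between Python versions, but the idea is the same. The `add` function is just an illustration.

```python
import dis

def add(a, b):
    # One line of high-level code...
    return a + b

# ...becomes a short list of basic steps: load a, load b, add them, return the result.
for instruction in dis.get_instructions(add):
    print(instruction.opname, instruction.argrepr)
```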

We also needed ways for programs, and eventually computers, to talk to each other for more sophisticated tasks, so we built interfaces called APIs, or application programming interfaces. We got better and better at this: faster processors, bigger memory, more powerful APIs and development environments.

This model took us pretty far, but it was a pitiful imitation of the brain. Sequential processing was the bottleneck. No matter how fast it gets, a traditional CPU core works serially and stepwise, one instruction after another. Move this number into a register, add another to it, next. That’s not the way your brain works; you see a cat and a million neurons fire at once to rescue that mouse.

Painting pixels on screens was the problem that demanded parallel processing. Screens got bigger, gaming got going, graphics broke out. That’s how NVIDIA got its start: it built graphics hardware, and in 1999 it marketed the GeForce 256 as the first GPU, or graphics processing unit. To paint millions of pixels at once, you need a different architecture than serial processing. You need massive parallelism, like our brains.
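Screens make a good example because each pixel’s color can usually be worked out on its own. Here is a minimal sketch in Python, assuming a tiny hypothetical 64 by 48 screen and a made-up `shade` function: the serial version visits pixels one by one like a CPU core, while the parallel version hands them all out to a pool of workers. In CPython, threads won’t actually speed up pure-Python math like this (the global interpreter lock lets only one thread run Python code at a time); the point is the shape of the work, which a GPU spreads across thousands of cores.

```python
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 64, 48  # a tiny hypothetical "screen"

def shade(index):
    """Work out one pixel's brightness (0-255) from its position alone."""
    x, y = index % WIDTH, index // WIDTH
    return (x * 255 // (WIDTH - 1) + y * 255 // (HEIGHT - 1)) // 2

def render_serial():
    # CPU-style: visit the pixels one after another.
    return [shade(i) for i in range(WIDTH * HEIGHT)]

def render_parallel(workers=8):
    # GPU-style idea: no pixel depends on another, so hand them all out at once.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(shade, range(WIDTH * HEIGHT)))

print(render_serial() == render_parallel())  # same image either way: True
```

Because no pixel needs another pixel’s answer, the order of the work doesn’t matter, and that independence is exactly what parallel hardware exploits.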

Now we’re getting closer to how our brains work. A neural network is built from huge numbers of simple calculations that can run side by side, so using GPUs rather than CPUs lets computers work a problem from many angles at once. It takes a lot of different perspectives to recognize a cat. Thank goodness for cat videos. After cats, we started training neural nets on larger datasets, with more compute and more scale.
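A neural network is, at heart, lots of simple units doing the same small calculation. A toy sketch in Python, with made-up weights (a trained network would have learned them from data, and real ones have millions or billions): each neuron takes a weighted sum of its inputs and squashes it into the range 0 to 1 with a sigmoid function. Training, the part where the weights get adjusted, is not shown.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: a weighted sum of its inputs, squashed into 0..1."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 / (1 + math.exp(-total))  # sigmoid activation

def layer(inputs, weight_rows, biases):
    # Every neuron in a layer reads the same inputs but does its own math,
    # so all of them could be computed at the same time on parallel hardware.
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical numbers: 3 input "pixel" features feed a layer of 2 neurons,
# which feed 1 output neuron.
pixels = [0.9, 0.1, 0.4]
hidden = layer(pixels, [[0.5, -0.2, 0.8], [-0.3, 0.9, 0.1]], [0.0, 0.1])
output = layer(hidden, [[1.2, -0.7]], [-0.2])
print(output)  # a single score between 0 and 1, e.g. "how cat-like is this?"
```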

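The transformer architecture, introduced by Google researchers in 2017, is a type of neural network built around a mechanism called attention, which lets the model weigh every word in a passage against every other word at once instead of reading one word at a time. That makes transformers a natural fit for parallel hardware, and scaling them up gave us the AI Large Language Models we use today. Neural nets and transformers run on massively parallel compute clusters, mostly powered by NVIDIA GPUs.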
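The core of attention fits in a few lines. This is a simplified sketch of scaled dot-product attention in plain Python: a real transformer first multiplies each word’s vector by learned weight matrices to get separate queries, keys, and values, and runs many attention heads side by side, while this sketch skips that and reuses the same made-up two-number word vectors for all three.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: each position blends in every position,
    weighted by how similar their vectors are."""
    d = len(keys[0])
    outputs = []
    for q in queries:  # each row is independent, so a GPU can do them all at once
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Hypothetical 2-number "embeddings" for a three-word sentence.
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention(words, words, words))
```

In practice the loop is written as a couple of big matrix multiplications, which is precisely the kind of math GPUs are built to do in parallel.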

This is a big step change in computing and it’s why NVIDIA won and Intel lost. The GPU was repurposed for AI while Intel stuck with their outdated CPU architecture.

There is one more piece to the NVIDIA magic, called CUDA. CUDA is NVIDIA’s software platform and API for programming its GPUs. Remember how hardware is nothing without software? CUDA lets developers write code in familiar languages like C and C++, with extensions for describing work that thousands of GPU threads run at the same time.
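The CUDA programming model is easiest to see in a small simulation. In real CUDA C++, you would mark a function `__global__`, read each thread’s position from the built-in `blockIdx.x`, `blockDim.x`, and `threadIdx.x`, and launch it with `kernel<<<blocks, threads>>>(...)`. The Python sketch below only mimics that on a CPU; `add_kernel` and `launch` are hypothetical names, and the loop inside `launch` stands in for threads that a GPU would run simultaneously.

```python
def add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """Written like a CUDA kernel: each call handles exactly one element."""
    i = block_idx * block_dim + thread_idx  # this thread's global index
    if i < len(out):  # the last block may have spare threads with nothing to do
        out[i] = a[i] + b[i]

def launch(kernel, grid_dim, block_dim, *args):
    # On a GPU, every (block, thread) pair runs at the same time.
    # Here we just loop over them to mimic the launch on a CPU.
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)

n = 10
a = list(range(n))
b = [10 * x for x in a]
out = [0] * n
block_dim = 4
grid_dim = (n + block_dim - 1) // block_dim  # round up: 3 blocks of 4 threads
launch(add_kernel, grid_dim, block_dim, a, b, out)
print(out)  # → [0, 11, 22, 33, 44, 55, 66, 77, 88, 99]
```

The programmer describes what one thread does to one element; the hardware supplies thousands of threads to cover the whole array at once.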

This has turned into a three-part series. Part I, “Let’s Get Physical,” covered how computing scaled. Today, we saw how cats helped drive the change from serial to parallel processing, so we can build software based on how our big brains work. I’ll fill in the missing parts next week, notably the mobile/cloud wave that ties these two together.

It’s a lot easier to connect dots looking backwards, but it might give us an idea of what will happen next.

Best, Andrew

I have a love/hate relationship with this post. Love the computing history, the great, simple definitions of things like the BIOS. I am a dog person, though, and believe cats are the spawn of Satan, and this post has given me an appreciation for cats and cat videos.

Sacrilege

I don't *think* Stephenson got me thinking about computers = brains. I was around 20 or 21 when I first read his stuff, and I suspect Asimov, Clarke, and other sci-fi classics had probably already done most of that heavy lifting... but Stephenson did such a great job of explaining how something like that might happen. I think that was the truly notable thing about his work for me.