Talking to the Wizard behind the curtain

Understanding how all of a sudden we can talk to computers

In the not-so-distant future, you’re going to be talking to one of our AI overlords, and you’re going to wonder: how did this happen?

It’s not so strange if you know a little about the wizard behind the curtain. So today we’re going to walk through the three basic components behind how, all of a sudden, we can talk to computers, and they can talk back.

Let’s talk

Originally, the only way you could interact with an AI model was through text, by typing into a chatbot like ChatGPT. Soon AI models became multimodal, which is a fancy way of saying you can now throw pictures at them as well as words.

But the real game changer is computers we can talk to.

Check out this clip of ChatGPT’s new advanced voice mode:

For more proof, how about 30 seconds of AI sarcasm?

To figure out how all this works, let’s start with Grandma.

Talking to Grandma

I used to be something of an expert in grandma video calls. It was the classic cross-generational Skype scenario: you’re on one side of the country (or the world), Grandma is on the other, and it’s really nice to see and talk to her. Skype was one of the first platforms to make voice and video calls between computers easy. Zoom, Teams, FaceTime, and every other video-conferencing system you’ve ever used are built on the same basic technology.

First, we take what’s going on in the real world (Grandma’s voice and her face) and translate it into something a computer can deal with. Grandma’s voice is a sound wave. To convert a sound wave, we measure it thousands of times per second and assign each measurement, or sample, a numeric value. We package these values up into computer bits and bobs and send them down the wire, at close to the speed of light, to a piece of software on the other side that unpacks them back into Grandma’s voice.
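For the curious, here’s roughly what that looks like in code. This is a minimal Python sketch, not what Skype actually runs. It assumes 8,000 samples per second (a common rate for telephone-quality audio) and 16-bit samples, and the function names are made up for illustration. It samples a pure tone standing in for Grandma’s voice, packs each sample into two bytes, and unpacks them again.

```python
import math
import struct

SAMPLE_RATE = 8000  # samples per second; assumed telephone-quality rate


def sample_tone(freq_hz, seconds, rate=SAMPLE_RATE):
    """Measure a pure tone (a stand-in for Grandma's voice) `rate` times per second."""
    n = int(seconds * rate)
    return [math.sin(2 * math.pi * freq_hz * i / rate) for i in range(n)]


def pack(samples):
    """Turn each float in [-1.0, 1.0] into a signed 16-bit integer and pack into bytes."""
    ints = [max(-32768, min(32767, round(s * 32767))) for s in samples]
    return struct.pack(f"<{len(ints)}h", *ints)  # '<h' = little-endian 16-bit int


def unpack(data):
    """Reverse of pack(): bytes back into floats in [-1.0, 1.0]."""
    count = len(data) // 2
    return [v / 32767 for v in struct.unpack(f"<{count}h", data)]


if __name__ == "__main__":
    tone = sample_tone(440, 0.5)   # half a second of an A note
    wire = pack(tone)              # what gets sent down the wire
    heard = unpack(wire)           # what the other side plays back
    print(len(tone), "samples,", len(wire), "bytes")
```

Half a second of audio becomes 4,000 samples and 8,000 bytes on the wire, and the numbers on the far side match the originals to within a rounding error.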

Video uses the same principle, but instead of sound waves we translate Grandma’s face into a series of images. Each image is broken out into red/green/blue values, which are decoded on the other side and reassembled into a video stream of Grandma.
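The same trick works for video. This Python sketch makes a few simplifying assumptions: a frame is a tiny grid of (red, green, blue) values from 0 to 255, and the hypothetical `encode_frame` / `decode_frame` functions just flatten it into bytes behind a small width/height header. Real video codecs such as H.264 also compress those values heavily, which this sketch skips.

```python
import struct


def encode_frame(pixels):
    """Flatten a grid of (r, g, b) tuples into bytes, prefixed by width and height."""
    height, width = len(pixels), len(pixels[0])
    header = struct.pack("<HH", width, height)  # two unsigned 16-bit ints
    body = bytes(channel for row in pixels for pixel in row for channel in pixel)
    return header + body


def decode_frame(data):
    """Rebuild the grid of (r, g, b) tuples from bytes made by encode_frame()."""
    width, height = struct.unpack_from("<HH", data, 0)
    body = data[4:]
    frame = []
    for y in range(height):
        row = []
        for x in range(width):
            i = (y * width + x) * 3  # 3 bytes (R, G, B) per pixel
            row.append(tuple(body[i:i + 3]))
        frame.append(row)
    return frame


if __name__ == "__main__":
    # A 2x2 "image of Grandma": red, green, blue and white pixels
    frame = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]]
    data = encode_frame(frame)
    print(len(data), "bytes", decode_frame(data) == frame)
```

A video stream is then just a long run of frames like this one.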

Like a secret decoder ring, we’re encoding stuff on one side and decoding it on the other. The core component doing the work here is called a CODEC, which is short for COder ←💍→ DECoder. Along the way, a codec usually squeezes (compresses) the data so it fits through the internet pipe. Grandma’s voice and image go in one side, travel around the world, and come out the other side looking and sounding pretty much the same.
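Real call codecs are far more sophisticated, but a classic telephone trick shows the encode/decode idea in miniature. The Python sketch below uses μ-law companding, the idea behind the G.711 telephone codec. It uses the simple continuous form of μ-law rather than G.711’s exact bit layout, and the function names are made up. Each sample shrinks to one byte (half the size of 16-bit audio), and what comes out the other side is close to what went in, but not exactly the same.

```python
import math

MU = 255  # mu-law parameter used in North American and Japanese telephony


def mulaw_encode(samples):
    """Compress floats in [-1.0, 1.0] to one byte each with the continuous mu-law curve."""
    out = bytearray()
    for x in samples:
        x = max(-1.0, min(1.0, x))
        # Logarithmic squeeze: quiet sounds get more of the 256 levels than loud ones
        y = math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)
        out.append(round((y + 1) / 2 * 255))  # map [-1, 1] onto 0..255
    return bytes(out)


def mulaw_decode(data):
    """Expand bytes from mulaw_encode() back into floats in [-1.0, 1.0]."""
    samples = []
    for b in data:
        y = b / 255 * 2 - 1
        samples.append(math.copysign(math.expm1(abs(y) * math.log1p(MU)) / MU, y))
    return samples


if __name__ == "__main__":
    voice = [0.8 * math.sin(2 * math.pi * 440 * i / 8000) for i in range(800)]
    encoded = mulaw_encode(voice)
    decoded = mulaw_decode(encoded)
    worst = max(abs(a - b) for a, b in zip(voice, decoded))
    print(len(encoded), "bytes, worst error", round(worst, 4))
```

With μ = 255, the worst error in this test stays within a couple of percent of full scale. That is the “pretty much the same” part in practice.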

I bet you’ve been on your share of crappy internet voice and video calls, but this technology is now solidly in the solved column. Incremental improvements will continue, but for now let’s build on it by talking to a dog.

Talking to Rex

Seeing and talking to Grandma, where the computer is just a go-between, is a lot easier than talking directly to the computer. Grandma gets you! How do we get a computer to understand you? The next thing we have to solve is speech recognition. The first time we did this was in 1922. Meet Radio Rex:

Radio Rex understood one word: REX! An electromagnet held this little clay bulldog in his house. When a mechanism tuned to the sound of an adult male voice saying ‘Rex’ broke the circuit, a spring yeeted him out the door. It is the same idea as the Cap’n Crunch whistle, whose 2,600 Hz tone could fool the phone network and launched a generation of phone phreaks 50 years later.
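Rex was purely mechanical, but we can mimic his one-word vocabulary digitally. The sketch below swaps in a standard signal-processing technique, the Goertzel algorithm, which measures how much energy a clip of audio has at a single frequency. The 500 Hz target is an assumption (it’s the figure usually quoted for the vowel sound in ‘Rex’). The threshold and function names are hypothetical.

```python
import math


def goertzel_power(samples, rate, target_hz):
    """Power of the signal in the DFT bin nearest target_hz (Goertzel algorithm)."""
    n = len(samples)
    k = round(n * target_hz / rate)  # index of the nearest frequency bin
    coeff = 2 * math.cos(2 * math.pi * k / n)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def rex_jumps(samples, rate=8000, target_hz=500, threshold=0.05):
    """True if the clip is loud enough near target_hz (threshold is a made-up value)."""
    # Scale so a sine of amplitude A sitting exactly on the bin scores about A**2
    score = goertzel_power(samples, rate, target_hz) / (len(samples) / 2) ** 2
    return score > threshold


if __name__ == "__main__":
    rate, n = 8000, 800  # a tenth of a second of audio
    rex = [0.5 * math.sin(2 * math.pi * 500 * i / rate) for i in range(n)]
    hum = [0.5 * math.sin(2 * math.pi * 1200 * i / rate) for i in range(n)]
    print("Rex!", rex_jumps(rex, rate), "| hum:", rex_jumps(hum, rate))
```

Like Rex, this detector has no idea what a word is. Anything loud enough at the right pitch will set it off.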

Speech recognition by computers has come a long way in 100 years. ASR, or Automatic Speech Recognition, is now extremely accurate, can pick voices out of background noise, and handles many of the world’s major languages. As with encoding and decoding Grandma’s voice, it rests on a handful of techniques. It breaks words down into their component phonemes (the smallest units of sound), maps acoustics to those sounds and words, and models language patterns to judge which word sequences are likely. Together, these let a computer figure out the words you speak. I’m not an expert in any of this, but it’s not magic, it’s math.
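Here is a sketch of one piece of that math. The acoustic side of a recognizer can hear two transcriptions that sound almost alike (the classic pair is ‘recognize speech’ and ‘wreck a nice beach’). A language model breaks the tie by asking which word sequence is more likely. This toy Python version assumes a three-sentence training corpus and a simple bigram model with add-one smoothing. Real ASR systems use vastly larger models, and all names here are illustrative.

```python
import math
from collections import Counter

# Hypothetical, tiny training corpus; real recognizers learn from vastly more text.
TRAINING_TEXT = [
    "it is hard to recognize speech",
    "computers can recognize speech",
    "we walked on a nice beach",
]


def train_bigrams(sentences):
    """Count single words (as histories) and adjacent word pairs."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]  # sentence start/end markers
        unigrams.update(tokens[:-1])
        bigrams.update(zip(tokens, tokens[1:]))
    vocab_size = len(set(unigrams) | {"</s>"}) + 1  # +1 slot for unseen words
    return unigrams, bigrams, vocab_size


def log_prob(sentence, model):
    """Log-probability of a word sequence under the bigram model."""
    unigrams, bigrams, vocab_size = model
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    total = 0.0
    for prev, word in zip(tokens, tokens[1:]):
        # Add-one (Laplace) smoothing: unseen pairs are unlikely, not impossible
        total += math.log((bigrams[(prev, word)] + 1) / (unigrams[prev] + vocab_size))
    return total


def pick_transcript(candidates, model):
    """Keep the candidate transcription the language model finds most likely."""
    return max(candidates, key=lambda s: log_prob(s, model))


if __name__ == "__main__":
    model = train_bigrams(TRAINING_TEXT)
    heard = ["it is hard to wreck a nice beach", "it is hard to recognize speech"]
    print(pick_transcript(heard, model))
```

With this tiny corpus, ‘it is hard to recognize speech’ wins easily. Every word pair in it was seen during training, while ‘to wreck’ and ‘wreck a’ never were.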

Rex and Grandma still can’t talk to each other

But we’re getting close. We can convert speech into something a computer can process, and we can get a computer to map that speech into words. But it’s not enough to know the words: what do they mean when you string them together? It turns out this is the hardest trick of all.

Ambiguity is one problem. For example, what does this sentence mean?

Stolen painting found by tree.

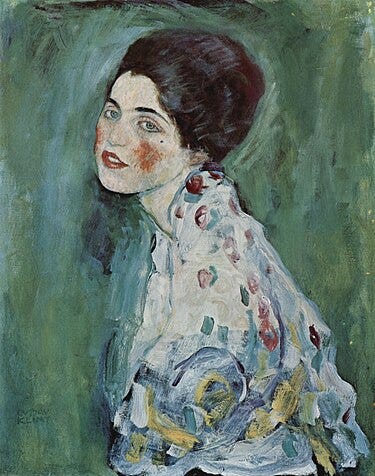

This was the headline of a story about Gustav Klimt’s ‘Portrait of a Lady’. The painting had been believed stolen and was found years later by a gardener, tucked inside a panel behind a tree.

In that context the sentence means:

By a tree, a stolen painting was found.

However, it could also mean:

A tree found a stolen painting.

The meaning depends on how you read the preposition ‘by’. It can say where the painting was found (next to a tree, the first meaning). Or it can name who did the finding, just as it names the gardener in ‘found by a gardener’ (the second meaning).
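Here’s a sketch of the computer’s side of the problem. It uses a tiny made-up grammar in which ‘by’ can introduce either a location or an agent. Given that grammar, a standard CKY chart parser finds two valid structures for the same five words. The grammar, labels like `PP_loc`, and the helper names are all hypothetical. Real parsers learn their grammars from data.

```python
from collections import defaultdict
from itertools import product

# Hypothetical toy grammar in Chomsky normal form. 'by' is listed twice on purpose:
# as a preposition of place (P_loc) and as the word that introduces who did it (P_agent).
LEXICON = {
    "stolen": {"Adj"},
    "painting": {"N"},
    "found": {"V"},
    "by": {"P_loc", "P_agent"},
    "tree": {"NP"},
}
RULES = [  # (parent, left child, right child)
    ("S", "NP", "VP"),
    ("NP", "Adj", "N"),
    ("VP", "V", "PP_loc"),
    ("VP", "V", "PP_agent"),
    ("PP_loc", "P_loc", "NP"),
    ("PP_agent", "P_agent", "NP"),
]


def parse(words):
    """CKY chart parsing: return every tree labeled S that spans all the words."""
    n = len(words)
    # chart[i][j] maps a category to every tree covering words[i:j]
    chart = [[defaultdict(list) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        for cat in LEXICON[word]:
            chart[i][i + 1][cat].append((cat, word))
    for span in range(2, n + 1):
        for i in range(n - span + 1):
            j = i + span
            for k in range(i + 1, j):  # every split point
                for parent, left, right in RULES:
                    for lt, rt in product(chart[i][k][left], chart[k][j][right]):
                        chart[i][j][parent].append((parent, lt, rt))
    return chart[0][n]["S"]


def bracket(tree):
    """Render a tree as labeled brackets, e.g. [NP [Adj stolen] [N painting]]."""
    label, *children = tree
    if isinstance(children[0], str):
        return f"[{label} {children[0]}]"
    return f"[{label} " + " ".join(bracket(c) for c in children) + "]"


if __name__ == "__main__":
    for tree in parse("stolen painting found by tree".split()):
        print(bracket(tree))
```

The two printed trees differ only in the label on ‘by tree’. One reads it as a place, and the other reads it as the finder. The parser has no way to choose between them. Picking the sensible reading takes knowledge about the world (trees don’t find things).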

Language is full of ambiguities like this; it is not enough to just understand the meaning of the words.

Speech is a hot mess. On top of ambiguity, add idioms and expressions, sarcasm and tone, not to mention that language is constantly evolving.

This is the last piece of the puzzle, and it goes by our third and final acronym for today: NLP, or Natural Language Processing. NLP is, without a doubt, the hardest part of getting computers to understand what we are saying.

NLP’s recent leap forward is built on the same breakthroughs that gave us modern AI: training systems on massive amounts of data and incorporating human feedback loops.

Complex Systems

Thanks for sticking with me through Grandma, Rex and the Tree. I hope it all makes sense. Knowing a little about what’s going on behind the curtain should help you get the most out of this new tech without worrying about it too much.

Our modern world is full of complex systems like this, not just in technology but in other fields and institutions too. If you have questions about this system, let me know in the comments. And if there’s a complex system you know a thing or two about, I’d love to hear about that too 👇🏻

best, Andrew
