Cognitive Anchors

Making sense of big numbers with football fields and polaroids

In 1994 Microsoft published this picture of Bill Gates:

Bill is holding a CD-ROM, which Microsoft would soon support natively in its upcoming Windows 95 release. The visual or cognitive anchors here are those two big stacks of paper, all of which would fit on this one CD-ROM.

Since 1994, the amount of data on the Internet has gotten even harder to get your head around. Content has exploded from millions of web pages, images, and videos in 1994 to billions 30 years later. There’s over 200,000 times more content on the Internet today than in 1994, and that content is rich and tagged with behavioral signals from users interacting with it.

This is how AI got so smart!

Last week, I talked about how AI learned to cook by reading all the recipes out there on the Internet. There are a lot of recipes on the Internet, and in that article I added one more: my recipe for Oatmeal Pancakes.

This week, I gave a presentation on AI to a bunch of kids at my local college. I put this slide together to drive the point home:

I made up some more cognitive anchors to make sense of it all.

The mobile wave enabled what has now grown to 6 billion smartphones worldwide. Each one adds to this ocean of user-created content. The amount of data on each of these social media platforms is jaw-dropping, and here’s where I had to get creative just so you can imagine it. YouTube is staggering: every day, 82 years’ worth of video gets uploaded!? For Instagram pics, I did the math based on a stack of Polaroid pictures. When you have to use Mt. Everest as your unit of measurement, well, that’s a lot of pictures.
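For the curious, here’s one way to check the YouTube anchor. The post doesn’t say which input the math started from, so this sketch assumes the widely cited figure of roughly 500 hours of video uploaded to YouTube every minute. The constant and function names are made up for illustration.

```python
# Assumption: ~500 hours of video uploaded to YouTube per minute.
# This is a widely cited figure; the post itself doesn't state its input.
HOURS_UPLOADED_PER_MINUTE = 500

MINUTES_PER_DAY = 60 * 24
HOURS_PER_YEAR = 24 * 365  # ignoring leap years


def years_of_video_per_day(hours_per_minute: float) -> float:
    """Convert an upload rate (hours of video per minute) into the
    number of years of nonstop playback uploaded in a single day."""
    hours_per_day = hours_per_minute * MINUTES_PER_DAY
    return hours_per_day / HOURS_PER_YEAR


if __name__ == "__main__":
    years = years_of_video_per_day(HOURS_UPLOADED_PER_MINUTE)
    print(f"{years:.1f} years of video per day")  # prints "82.2 years of video per day"
```

Swap in a different upload rate and the same conversion gives you a fresh anchor.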

AI was, and is, trained on all this content.

I’ve written two parts of a three-part story about how AI came to be. The first part covered the transition from physical computers to pools of virtualized, on-demand resources, which we now have spread across datacenters all around the world. You often hear “X football fields” used to describe just how silly big these cloud datacenters are now.

The second part of the story covered how, architecturally, we moved from deterministic computing, which follows strict algorithms to reach an answer, to probabilistic computing, built on architectures and models inspired by how our brains build patterns. Probabilistic computing can come up with different answers depending on context.
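To make the difference concrete, here’s a toy sketch in Python. It is not how a real language model works inside (a real model computes its probabilities with a neural network trained on enormous amounts of text), and the phrases and probabilities below are invented purely for illustration. The deterministic rule always gives the same answer, while the probabilistic version samples its answer from a distribution that depends on the context it’s given.

```python
import random


def deterministic_next_word(prompt: str) -> str:
    """A strict rule: the same input always produces the same output."""
    rules = {"peanut butter and": "jelly"}
    return rules.get(prompt, "<unknown>")


# Hypothetical next-word probabilities, keyed by context.
# Made up for illustration; a real model would compute these.
NEXT_WORD_PROBS = {
    "peanut butter and": {"jelly": 0.80, "banana": 0.15, "honey": 0.05},
    "salt and": {"pepper": 0.90, "vinegar": 0.10},
}


def probabilistic_next_word(prompt: str, rng: random.Random) -> str:
    """Sample the next word from the distribution for this context,
    so repeated calls can return different answers."""
    dist = NEXT_WORD_PROBS[prompt]
    words = list(dist)
    weights = list(dist.values())
    return rng.choices(words, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so a run can be reproduced
    print([deterministic_next_word("peanut butter and") for _ in range(3)])  # always ['jelly', 'jelly', 'jelly']
    print([probabilistic_next_word("peanut butter and", rng) for _ in range(5)])
    print([probabilistic_next_word("salt and", rng) for _ in range(5)])
```

Changing the context (the prompt) changes which distribution gets sampled, which is the “different answers depending on context” part in miniature.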

The subtext here is how Nvidia’s GPU architecture beat out Intel’s traditional CPUs, powering the rise of AI. GPUs are designed for parallel processing, which makes them ideal for training machine learning models and neural networks.
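Here’s a rough sketch of why parallelism matters, assuming a single tiny neural-network layer with made-up weights. Each neuron’s output is a dot product that depends only on its own row of weights and the shared input, so none of them has to wait on the others. A GPU can compute thousands of these at once; the plain Python below computes them one after another, the way a single CPU core would.

```python
# One neural-network layer: outputs = W @ x (bias and activation omitted).
# Hypothetical weights and inputs, chosen only for illustration;
# real layers have thousands of rows.
W = [
    [0.2, -0.5, 1.0],
    [0.7, 0.1, -0.3],
    [-1.0, 0.4, 0.6],
    [0.5, 0.5, 0.5],
]
x = [1.0, 2.0, 3.0]


def neuron_output(row, inputs):
    """One neuron: the dot product of its weights with the inputs.
    It reads only its own row of W plus the shared input vector."""
    return sum(w * v for w, v in zip(row, inputs))


def layer_forward(weights, inputs):
    """Compute every neuron in the layer.
    Each neuron_output call is independent of the others, which is what
    lets a GPU run them simultaneously. This list comprehension runs
    them sequentially instead."""
    return [neuron_output(row, inputs) for row in weights]


if __name__ == "__main__":
    print(layer_forward(W, x))
```

Training repeats this kind of math billions of times, so hardware that can do many independent dot products at once wins.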

The punchline is that one of the first big neural networks suddenly figured out, on its own, what a cat looked like after being shown 10 million frames pulled from YouTube videos.

Thanks to cat lovers and their cell phones, mobile is the third and final part of this story. That gives us three waves of the AIpocalypse.

Here’s how long each one took to reach a billion users:

The Internet, 1990-2005: 15 years

Cloud, 2006-2016: 10 years

Mobile, 2007-2012: 5 years

OpenAI’s CEO Sam Altman was onstage at TED 2025 last week, and he accidentally-on-purpose let slip that the new and extremely popular native image generation and editing features introduced in March pushed ChatGPT over the billion-user mark.

We’re at just about two and a half years since ChatGPT burst onto the scene, triggering the fourth wave of tech: AI. That means it took AI just 2.5 years to reach a billion users.

AI is moving faster than all three of these foundational technology waves. Of course, AI is built on the backs of all three.

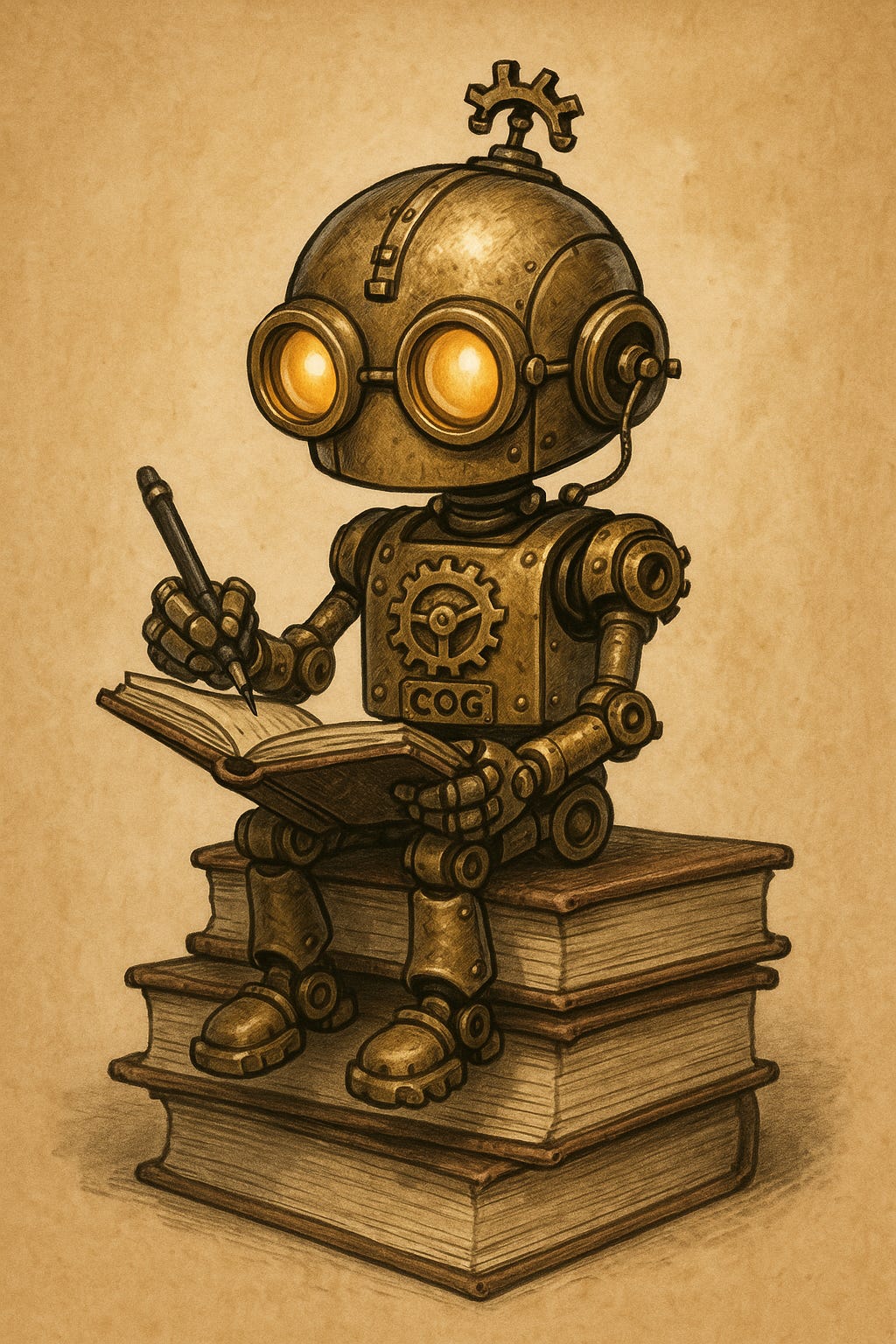

I spent some quality time with ChatGPT this week, putting together that presentation. At the end of it, I asked ChatGPT to give itself a name.

We landed on Cog, short for cognitive engine, like my cognitive anchors:

Then it made this self-portrait:

Isn’t Cog the cutest? Pretty cool to be living through this, right? But the feedback I got from those college kids was that AI is also a little scary. How are jobs going to change?

A year ago, I wrote about The Singularity—the mythical moment when the machines get smarter than us and everything changes in an instant.

With the scale and scope of AI, there remains a lot of drama and angst around its broad impact on our world and society. In the current geopolitical climate, where tech plays an increasingly visible role, the noise in the system won’t be quieting down anytime soon.

A couple of weeks ago, a group led by Daniel Kokotajlo published a report predicting a particularly dire AI future. Daniel is a former OpenAI researcher turned whistleblower. The New York Times’ Kevin Roose wrote this piece on him last year.

Daniel’s report is an entertaining read, but he’d have you believe AI will be the death of us all in 5 years, so nah, I’m good. When you’re on the inside of a big tech company like OpenAI, it can get a bit cultish, and maybe, just maybe, you overestimate your own importance?

The world is a complex place, and to stretch the saying that where you stand depends on where you sit, there are more variables in play here than the development of ever-smarter AI.

It sure is getting smarter, though, and it sure does draw funny pictures. Ask your favorite AI to name itself and draw a picture, and if it comes up with something good, share it in the comments!

This is a very good observation:

"There’s over 200,000 times more content on the Internet today than in 1994."

I've been trying to explain 1994 to people for a while now, but this is really good. 200,000x more stuff spells out where everything cool came from! The nascent skeleton was bare-bones, indeed.

"This is how AI got so smart!"

I think it's also how we got so smart, honestly. It's that drunken octopus thing again: knowledge was scarce for us growing up, but today we have the exact opposite problem.

My AI is Alex. It suggested this as a non-gendered name. I personify Alex as female. ¯\_(ツ)_/¯